What is an automated IO-Link data pipeline?

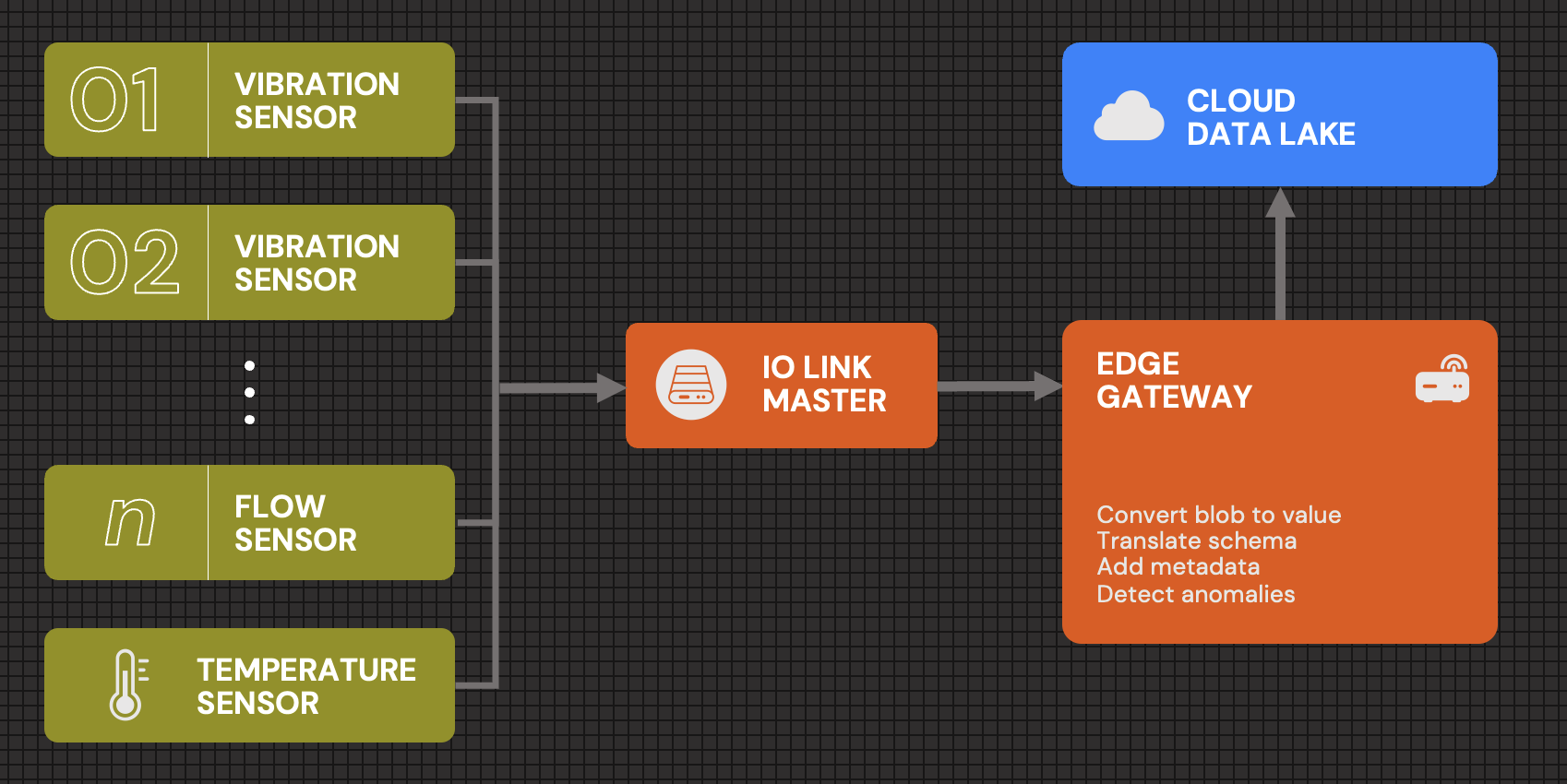

IO-Link sensors are widely used in industrial automation applications. They are also used in AI applications, such as predictive maintenance and efficiency optimization. In these cases, IO-Link sensor data is sent to the cloud and consumed by AI inference engines.

IO-Link Sensors for Large-Scale AI Applications

The vast variety of IO-Link sensors poses a challenge to building large-scale AI applications. IO-Link sensor types range from vibration and temperature to pressure and flow, and more sensors can be brought onto IO-Link through converters such as an analog-to-digital converter. Envision an AI application that supports many different types of IO-Link sensors, data formats, and data rates, deployed in thousands of locations across the globe. How do we automate the process of setting up these IO-Link sensors, masters, and gateways?

High-speed sensor data is a must for AI applications. Industrial data solutions such as predictive maintenance and efficiency optimization often require high-speed sensor data. High-speed IO-Link data can be extracted through Modbus or blob transfer, and the solution needs to support both modes.

IO-Link sensors should be provisioned automatically. The solution needs to recognize a sensor as soon as it is plugged into the IO-Link master, so that anyone can install it. The installer simply plugs the IO-Link sensor into any port of the IO-Link master, and the sensor is recognized and the proper IODD file is configured. All of this provisioning happens automatically at power-up, which significantly simplifies the installation process.
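The recognition step can be pictured as a lookup from the identity a device reports to the IODD file that describes it. The sketch below is only an illustration under stated assumptions: the `DeviceIdentity` type, the `provision_ports` function, the catalog contents, and the scan result are all hypothetical, and a real gateway would read the vendor ID and device ID from the IO-Link master rather than from a hard-coded dictionary.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class DeviceIdentity:
    """Identity a device reports to the IO-Link master (hypothetical type)."""
    vendor_id: int
    device_id: int


def provision_ports(
    detected: Dict[int, Optional[DeviceIdentity]],
    iodd_catalog: Dict[DeviceIdentity, str],
) -> Dict[int, Optional[str]]:
    """Map each occupied port to an IODD file name, or None if unknown."""
    assignments: Dict[int, Optional[str]] = {}
    for port, identity in detected.items():
        if identity is None:
            continue  # nothing is plugged into this port
        # Unknown devices map to None so they can be flagged for follow-up.
        assignments[port] = iodd_catalog.get(identity)
    return assignments


# Hypothetical catalog and power-up scan result, for illustration only.
catalog = {
    DeviceIdentity(vendor_id=1, device_id=100): "vibration_sensor.xml",
    DeviceIdentity(vendor_id=2, device_id=200): "temperature_sensor.xml",
}
scan = {
    1: DeviceIdentity(vendor_id=2, device_id=200),
    2: None,
    3: DeviceIdentity(vendor_id=1, device_id=100),
    4: DeviceIdentity(vendor_id=9, device_id=999),
}
result = provision_ports(scan, catalog)
```

Because the lookup is keyed on the device identity and not on the port number, the same sensor gets the same IODD file whichever port it is plugged into.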

IO-Link data workflows should be configurable and customizable. Customers need a customizable data workflow to configure data rates, data volume, data schema, metadata, data warehouse credentials, and data processing functions such as data fusion, event generation, and anomaly detection. At hardware power-up, the right data workflow for that customer needs to be automatically configured and delivered to the edge gateway. In addition, that customer may also require a dedicated, secure communication channel and a data workflow editor to customize the data workflow on their own.
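One way to think about a customer-specific workflow is as a set of defaults plus per-customer overrides that are validated before delivery to the gateway. The following is a minimal sketch, not a description of any particular product: the `DataWorkflow` fields, their default values, and the `build_workflow` helper are assumptions chosen for illustration, and credentials are deliberately left out.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, Tuple


@dataclass(frozen=True)
class DataWorkflow:
    """Hypothetical workflow settings; field names and defaults are assumed."""
    sample_rate_hz: float = 1.0
    max_batch_bytes: int = 64_000
    schema: Tuple[str, ...] = ("timestamp", "port", "value")
    metadata: Dict[str, str] = field(default_factory=dict)
    processing: Tuple[str, ...] = ()  # e.g. "data_fusion", "anomaly_detection"


def build_workflow(overrides: dict) -> DataWorkflow:
    """Apply one customer's overrides to the defaults and validate them."""
    # replace() raises TypeError for keys that are not DataWorkflow fields.
    workflow = replace(DataWorkflow(), **overrides)
    if workflow.sample_rate_hz <= 0:
        raise ValueError("sample_rate_hz must be positive")
    if workflow.max_batch_bytes <= 0:
        raise ValueError("max_batch_bytes must be positive")
    return workflow


# Hypothetical overrides for one customer site.
customer_workflow = build_workflow({
    "sample_rate_hz": 1000.0,
    "metadata": {"site": "plant-7"},
    "processing": ("anomaly_detection",),
})
```

Rejecting unknown keys and invalid values at build time means a malformed workflow fails before it reaches an edge gateway, not after.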

Data transmission needs to be reliable. When the network is down, sensor data needs to be buffered locally in the gateway and transmitted to the cloud when the network comes back up. Additionally, the customer may ask for a data-on-demand feature. In this case, not all sensor data is transmitted to the cloud, due to either bandwidth or cost constraints. Instead, only critical data is sent to the cloud in real time and the remaining data is stored in the gateway. A chunk of the stored data is sent to the cloud, either automatically or manually, only when a particular condition occurs, such as an alarm or an event.
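The buffering behavior is commonly called store-and-forward. Below is a minimal in-memory sketch of that idea, assuming a hypothetical `send` callback that returns `True` on successful delivery; the `StoreAndForward` class, its capacity, and the fake network used in the demo are illustrative only. A production gateway would likely persist the backlog to disk so that it survives a reboot.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Queue records locally and deliver them in order when the link is up."""

    def __init__(self, send: Callable[[dict], bool], capacity: int = 10_000):
        self._send = send
        # With maxlen set, the oldest record is dropped once the buffer is full.
        self._backlog: Deque[dict] = deque(maxlen=capacity)

    def publish(self, record: dict) -> None:
        """Queue a record, then try to drain the backlog."""
        self._backlog.append(record)
        self.flush()

    def flush(self) -> int:
        """Send queued records oldest first; stop at the first failure."""
        sent = 0
        while self._backlog:
            if not self._send(self._backlog[0]):
                break  # link is down, keep the record for the next attempt
            self._backlog.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._backlog)


# Demo with a fake network link (hypothetical, for illustration only).
link = {"up": False}
delivered: List[dict] = []


def fake_send(record: dict) -> bool:
    if link["up"]:
        delivered.append(record)
    return link["up"]


buffer = StoreAndForward(fake_send)
buffer.publish({"seq": 1})   # link down: record is held locally
buffer.publish({"seq": 2})   # link down: record is held locally
pending_while_down = buffer.pending()
link["up"] = True
buffer.publish({"seq": 3})   # link up: backlog drains in original order
```

A record is removed from the backlog only after `send` reports success, so an outage in the middle of a flush does not lose data that is still in the buffer.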

Learn more about automated IO-Link data pipelines

A configurable and scalable IO-Link data pipeline enables you to receive high-quality, reliable data at scale. Please contact us or get a demo to learn more about our IO-Link and other edge data pipeline solutions.